Zombie Philosophy

A groundbreaking new physics paper is out. Roli, Jaeger, and Kauffman (2022) claim to have proven the invalidity of quantum mechanics. Moreover, the paper proves that all known candidates for its replacement (string theory, loop quantum gravity, even Stephen Wolfram's graph-theoretical approach) are equally invalid.

Strangely, it was not published in a physics journal but in the philosophy section of "Frontiers in Ecology and Evolution". And the title really undersells the impact of its conclusions: "How Organisms Come to Know the World: Fundamental Limits on Artificial General Intelligence".

To understand how this is a physics paper, we must consider this line:

This leads us to our central conclusion, which is both radical and profound: not all possible behaviors of an organismic agent can be formalized and performed by an algorithm—not all organismic behaviors are Turing-computable. Therefore, organisms are not Turing machines.

Starting from this premise, there's a simple two-step disproof of quantum mechanics.

#1: The brain is a physical system

Well-known philosopher and rock singer David Chalmers coined the term "the hard problem of consciousness" to distinguish it from the much easier problem of predicting the brain's externally observable behavior.

The hard problem of consciousness is the question of why qualia exist, and why we have the specific qualia that we do. How do you know that the people around you aren't all p-zombies? The problem is hard because it's a philosophical one, not an empirical one. A p-zombie, by definition, behaves exactly the same as a human with qualia. You could hook a mind-reading machine up to them, which prints out the words they're about to say before they actually say them, and you still couldn't know for sure that they're actually experiencing those words in the same way that you are. So there's no experiment that could be performed, even in principle, to tell them apart and solve the hard problem of consciousness.

Luckily, the paper in question is not talking about the hard problem of consciousness. They helpfully point this out by mentioning that they're discussing the possible behaviors of an artificial agent, and registering a prediction that no such agent will be able to solve real-world problems like "opening a coconut" with the level of versatility that a human can.

The behavior of a brain, and the mechanisms by which the information it processes gives rise to that behavior, are part of the physical world and subject to the same physical laws as every other object we interact with. This is nigh-universally accepted in physics and philosophy. A few examples:

- Physical damage to the cerebellum causes people to develop a strange walking gait: the same gait that drunk people have, because alcohol impairs the cerebellum.

- Damage to Broca's area of the brain causes people to lose the fluency and grammaticality of their sentences while preserving their meaning. Damage to Wernicke's area, by contrast, causes their speech to lose its meaning while keeping a fluent, normal-sounding sentence structure.

- Survivors of traumatic brain injuries often have changes to their cognition and behavior, such as amnesia, trouble focusing, etc.

- Individual neurons can be trained using classical conditioning, separate from the rest of the brain.

- Several chemicals, such as caffeine, can improve people's cognitive performance on tests, decrease their reaction time, and have other positive (and negative) effects. Non-chemical interventions can have similar effects, such as pulsing magnetic fields through the brain at a specific frequency.

- Playing ultrasound through the brain can make people hallucinate flashes of light, twitch their muscles, feel a tingling sensation, and can affect their mood.

- Physical damage to the brain can, in certain cases, increase that person's capabilities. People have developed sudden musical talent and other skills after brain trauma.

- Psychedelics can make people more open-minded.

- When people's limbs are paralyzed partway through life, they start losing their memories of having used those limbs when healthy.

- People who have had the corpus callosum (the connection between the two hemispheres of their brain) severed seem to have a sort of "split consciousness": they can't consciously compare two things shown to opposite halves of their visual field, and sometimes their hands will try to do opposing things.

- Brain imaging technology allows scientists to determine what image someone is thinking about, to predict what button they're going to push up to ten seconds in advance, to know what move they'll make in a game of rock-paper-scissors before they play it, and more.

- Low oxygen to the brain causes people to lose the ability to do complicated cognitive work like mental arithmetic.

- Brain damage can cause people to lose limb function, and also to be unaware of this. When asked to move their arm to prove they can, they'll confabulate some excuse, like that they don't want to. Squirting cold water in their left ear temporarily removes the cognitive impairment, and allows them to realize that their arm is paralyzed. Then a few minutes later they'll go back to unawareness, and forget what just happened.

- Non-physicalist philosophers are suspiciously protective of the physical integrity of their skull and brain.

So while philosophers can argue endlessly about where qualia come from, and whether changes in those qualia are caused by physics or by a pre-established harmony between the physical brain and an epiphenomenal consciousness, none of that is relevant here. An organism's observable behavior is determined solely by the physical construction of its brain and the information fed into it.

#2: Quantum mechanics is computable

The Schrödinger equation can be solved numerically to arbitrary precision, so quantum mechanics is computable. That is, you can program a computer with the initial state of any physical system and, given enough time and memory, compute its state at any later time. And indeed, physicists and chemists do exactly that all the time for systems small enough to fit on real hardware.
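
To make "computable to arbitrary precision" concrete, here is a minimal Python sketch for the simplest possible quantum system, a single two-level system. The Hamiltonian H = (Ω/2)·σ_x, the units with ħ = 1, and the function names are illustrative assumptions, and fourth-order Runge-Kutta is just one convenient integrator among many. The exact answer for this system is known in closed form, so we can check that the numerical error shrinks as the time step shrinks.

```python
import math


def evolve_two_level(omega: float, t: float, steps: int) -> tuple[complex, complex]:
    """Integrate i d|psi>/dt = H|psi> (hbar = 1) with classic fourth-order Runge-Kutta.

    Hypothetical toy model: H = (omega / 2) * sigma_x, starting in state |0>.
    Returns the amplitudes (a, b) of |0> and |1> at time t.
    """
    h = omega / 2.0  # off-diagonal coupling strength

    def deriv(a: complex, b: complex) -> tuple[complex, complex]:
        # d/dt (a, b) = -i H (a, b); sigma_x swaps the two amplitudes
        return -1j * h * b, -1j * h * a

    a, b = 1 + 0j, 0j
    dt = t / steps
    for _ in range(steps):
        k1 = deriv(a, b)
        k2 = deriv(a + 0.5 * dt * k1[0], b + 0.5 * dt * k1[1])
        k3 = deriv(a + 0.5 * dt * k2[0], b + 0.5 * dt * k2[1])
        k4 = deriv(a + dt * k3[0], b + dt * k3[1])
        a += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        b += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    return a, b


def exact_excited_probability(omega: float, t: float) -> float:
    # Closed-form Rabi oscillation for this Hamiltonian: P(|1>) = sin^2(omega * t / 2)
    return math.sin(omega * t / 2) ** 2


if __name__ == "__main__":
    omega, t = 1.0, 10.0
    for steps in (10, 100, 1000):
        _, b = evolve_two_level(omega, t, steps)
        error = abs(abs(b) ** 2 - exact_excited_probability(omega, t))
        print(f"{steps:5d} steps: error {error:.1e}")
```

For a fourth-order method, each tenfold reduction in step size should shrink the error by roughly four orders of magnitude. The cost of a naive simulation grows exponentially with the number of particles, but that limits how fast the computation runs, not whether it is possible in principle.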

The computability of quantum mechanics, combined with the physicality of brain function, logically implies that the decisions of an "organismic" brain are computable. So if the philosophy paper in question is correct, it disproves quantum mechanics.
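
The argument so far can be laid out as a short modus tollens. This is one possible formalization, not the paper's own framing. The symbols are introduced here for illustration: S(t) is the physical state of the brain plus whatever part of its environment feeds it information, U(t) is time evolution under quantum mechanics, and g is an assumed computable readout of behavior from that state (for example, which muscles fire).

```latex
\begin{align*}
\text{P1 (physicality):}\quad & B(t) = g\big(S(t)\big), \qquad S(t) = U(t)\,S(0) \\
\text{P2 (computability):}\quad & \forall \varepsilon > 0,\ \text{some algorithm approximates } U(t)\,S(0) \text{ to within } \varepsilon \\
\text{P1} \land \text{P2} \implies\quad & B \text{ is Turing-computable (to any desired precision)} \\
\text{Paper's claim:}\quad & B \text{ is not Turing-computable} \\
\text{Modus tollens:}\quad & \neg\,\text{P1} \lor \neg\,\text{P2}
\end{align*}
```

The evidence under #1 supports P1, so if the paper's conclusion holds, P2 is what has to give.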

Now, in a sense, we already know that quantum mechanics is wrong: it's incompatible with general relativity when it comes to exotic systems like black holes. But this paper proves that it's wrong in a much more mundane way: the brain of every insect on Earth violates quantum mechanics. This should revolutionize the field of fundamental physics! No more do physicists need billions of dollars for particle accelerators at CERN, nor astronomers for black hole telescopes; they can directly observe violations of quantum mechanics with a simple electron microscope.

Implications

Of course I don't actually think that quantum mechanics has been disproven. I think the philosophy paper contains an error. I didn't bother finding exactly where, for the same reason that mathematicians generally don't bother looking for the exact mistake in purported proofs of P = NP.

This particular paper was published in a journal that's known to be low quality, so it's not too surprising that neither of the peer reviewers drew attention to the obvious issue.

Nor is this a new problem. In his 1972 book What Computers Can't Do, Hubert Dreyfus wrote:

"Since we have seen no argument brought forward by the AI theorists for the assumption that human behavior must be reproducible by a digital computer operating with strict rules on determinate bits, we would seem to have good philosophical grounds for rejecting this assumption."

This, just like the more recently published paper above, should have been laughed out of the room. Schrödinger published his wave equation in 1926, Turing formalized computability theory in the 1930s, and both were well understood and accepted by 1972. Instead, Dreyfus's book (apparently somehow published without him ever hearing the obvious counterargument from someone with a basic knowledge of physics) became a widely read and praised work of philosophy.

I think this points to a deeper issue. Philosophy, mathematics, and physics are the three most fundamental fields of inquiry: humanity's attempts to truly understand the world in which we live. But philosophy ends up the odd one out.

Physics has empiricism. If your physical theory doesn't make a testable prediction, physicists will make fun of you. Those that do make a prediction are tested and adopted or refuted based on the evidence. Physics is trying to describe things that exist in the physical universe, so physicists have the luxury of just looking at stuff and seeing how it behaves.

Mathematics has rigor. If your mathematical claim can't be broken down into the language of first-order logic or a similar system with clearly defined axioms, mathematicians will make fun of you. Those that can be broken down into their fundamentals are then verified step by step, with no opportunity for sloppy thinking to creep in. Mathematics deals with ontologically simple entities, so it has no need to rely on human intuition or fuzzy high-level concepts in language.

Philosophy has neither of these advantages. Notice how broad the spread of disagreement is among philosophers on basically every aspect of their field, compared to mathematicians and physicists. This doesn't mean it's unimportant; on the contrary, philosophy is what created science in the first place! But without any way of systematically grounding itself in reality, it's easy for an unscrupulous philosopher to go off the rails.

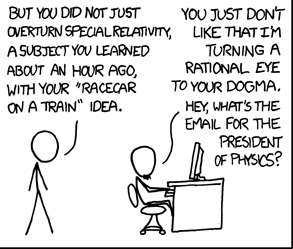

Consider the classic joke:

A university dean, to the physics department: "Why do I always have to give you so much money for all this expensive equipment? Why couldn't you be like the math department? All they need is pencils, paper, and waste-paper baskets. Or even better, like the philosophy department. All they need is pencils and paper."

The lack of an objective way to tell good philosophy from bad has led to a culture where a philosopher can say complete nonsense, and as long as it's written with sufficient academic jargon, it'll be publishable and treated with respect by the rest of the field.

A habit of respecting diverse views and being skeptical of your own intuition is of course a good thing when there's no way to tell for sure which of those views is correct, but just because most claims are unverifiable doesn't mean that all of them are. Some philosophical work is legitimately just bad, and philosophy's "all beliefs are equally valid" ethos has a tendency to be applied too broadly. Any new idea should always be given serious consideration, but if it contains basic logical flaws or contradicts empirical evidence, it's ok to reject it.

This is not a big deal when philosophy is a purely academic exercise, but it becomes a problem when people are turning to philosophers for practical advice. In the field of artificial intelligence, things are moving quickly, and people want guidance about what's to come. Do we need to be worried about unethical treatment of AI systems? Does moral realism mean that highly intelligent AI will automatically prioritize humans' best interests, or does the is-ought problem allow it to have its own values that are opposed to ours? What do concepts like "intelligence" and "values" actually mean?

These questions are very important, and purported answers to them that don't engage with reality are not only embarrassing to the field of philosophy, but risk having serious negative consequences if they're acted upon as though they're reliable predictions.

What Computers Can't Do, for example, predicted that computers could never pick out faces within a larger image (proven wrong in 1993), that general intelligence could have no mathematical formalization (proven wrong in 2005), that the amount of information stored in the environment might be infinite (proven wrong in 2008), and that any AI that can speak natural language would need a physical body (proven wrong in 2020). It also made many other predictions that were too vague to be conclusively falsified, but certainly have not held up very well.

It will never be the case that everyone believes the philosophers pushing these views, so the field of artificial intelligence will advance regardless of their protestations. But when members of the public are misled by this sort of wishful anthropocentrism, it creates the risk that society will be deeply unprepared for future breakthroughs.

Philosophy is an extremely challenging discipline; perhaps the most challenging of all. Figuring out the right answer is not easy, and for many questions it's possible we'll never truly know. But that doesn't mean that all possible answers are equally valid. Especially when it comes to the more empirical subfields of philosophy, some claims are just demonstrably nonsense. And the field's unwillingness to call out and sanction crackpots when they publish these sorts of papers does everyone a disservice.

Karl Marx famously wrote that philosophers have only "interpreted" the world, when the point is to change it. If philosophy wants to live up to this ideal, it needs to get its house in order.

"Thus, insofar as the question whether artificial intelligence is possible is an empirical question, the answer seems to be that further significant progress in cognitive simulation or in artificial intelligence is extremely unlikely."

-Hubert Dreyfus, 1972